Papers & Notes

Free PDF access is available until Nov 2015 through the ACM OpenTOC service.

A Survey on Multi-touch Gesture Recognition and Multi-touch Frameworks

Mauricio Cirelli, Ricardo Nakamura

We present a survey of touch-based gesture recognition techniques and frameworks. We propose an extended set of requirements that such techniques and frameworks should meet in order to provide better support for multi-touch applications. Gestures may be described formally or through user-provided examples. Frameworks connect the multi-touch sensor drivers to the application and may use different gesture recognizers.

ActivitySpace: Managing Device Ecologies in an Activity-Centric Configuration Space

Steven Houben, Paolo Tell, Jakob E Bardram

To mitigate multi-device interaction problems (such as lack of control, intelligibility and context), ActivitySpace provides an activity-centric configuration space that enables users to integrate and work across several devices by using the space between the devices. We report on a study with 9 participants that shows that ActivitySpace helps users to easily manage devices and their allocated resources while exposing a number of usage patterns.

ACTO: A Modular Actuated Tangible User Interface Object

Emanuel Vonach, Georg Gerstweiler, Hannes Kaufmann

ACTO is a customizable, reusable actuated tangible user interface object. Its modular design allows quick adaptations for different tabletop scenarios, making otherwise integral parts like the actuation mechanism or the physical configuration interchangeable. This qualifies it as an ideal research and education platform for tangible user interfaces.

An Empirical Characterization of Touch-Gesture Input-Force on Mobile Devices

Faisal Taher, Jason Alexander, John M Hardy, Eduardo Velloso

An Interaction Model for Grasp-Aware Tangibles on Interactive Surfaces (Note)

Simon Voelker, Christian Corsten, Nur Al-huda Hamdan, Kjell Ivar Øvergård, Jan Borchers

Tangibles on interactive surfaces enable users to physically manipulate digital content by placing, moving, or removing a tangible object. However, information about whether and how a user grasps these objects has not yet been mapped out for tangibles on interactive surfaces. Based on Buxton's Three-State Model for graphical input, we present an interaction model that describes input on tangibles that are aware of the user's grasp.

BullsEye: High-Precision Fiducial Tracking for Table-based Tangible Interaction

Clemens N Klokmose, Janus B Kristensen, Rolf Bagge, Kim Halskov

BullsEye improves the precision of optical fiducial tracking on tangible tabletops to sub-pixel accuracy, down to a tenth of a pixel. Techniques include a fiducial design for GPU-based tracking, a lighting calibration that allows computation on a greyscale image, and an automated technique for compensating optical distortion.

Characterising the Physicality of Everyday Buttons (Note)

Jason Alexander, John Hardy, Stephen Wattam

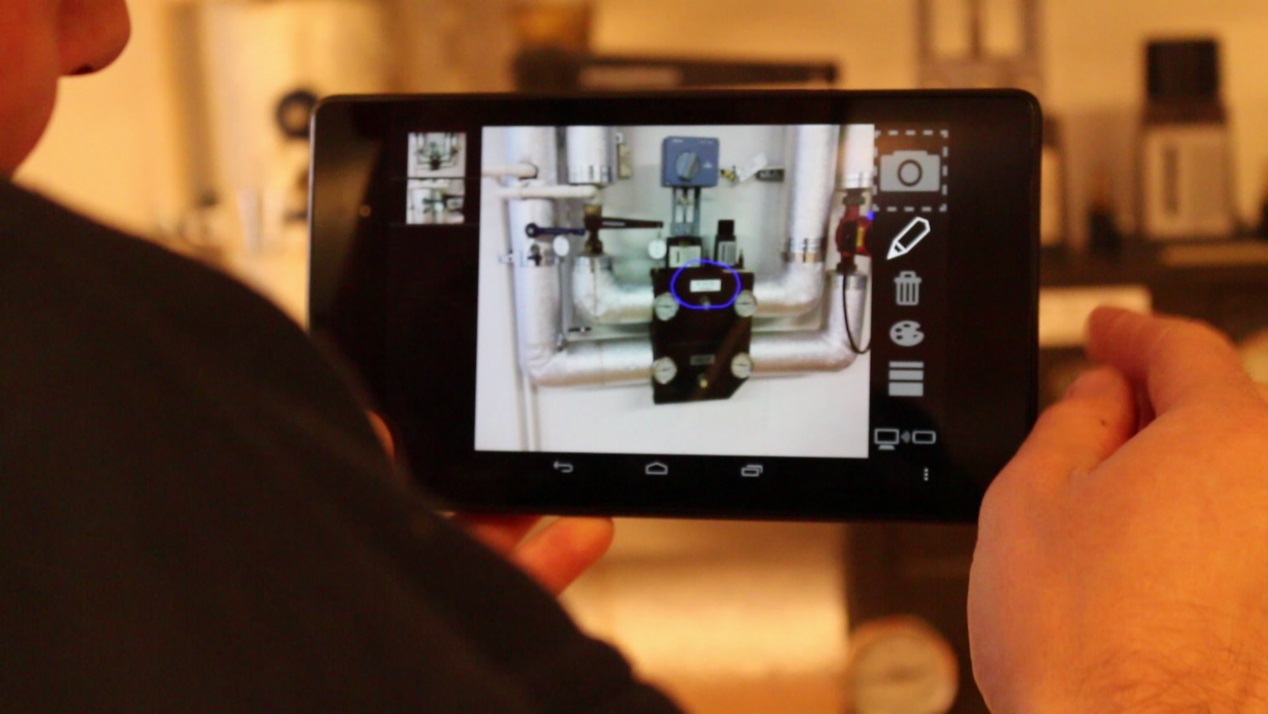

Designing a Remote Video Collaboration System for Industrial Settings

Veronika Domova, Elina Vartiainen, Marcus Englund

This paper presents a remote collaboration system that enables a remote expert to guide a local worker during a technical support task. The system was designed for industrial settings, which imposed specific design requirements. To validate the applicability of the system in a real setting, we conducted a user study with operators and field workers who used the system in their working environment at a water treatment plant.

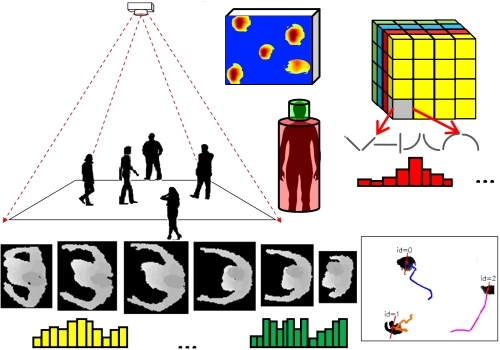

DT-DT: Top-down Human Activity Analysis for Interactive Surface Applications

Gang Hu, Derek Reilly, Mohammed Alnusayri, Ben Swinden, Qigang Gao

We present a novel human tracking and activity analysis approach using a top-down 3D camera. Our hierarchical tracking models local and global affinities, scene constraints and motion patterns to track people in space, and a novel salience occupancy pattern (SOP) is used for action recognition. We validate the tracker using two different interactive surface applications: Proximity Table and My Mother’s Kitchen Exhibit.

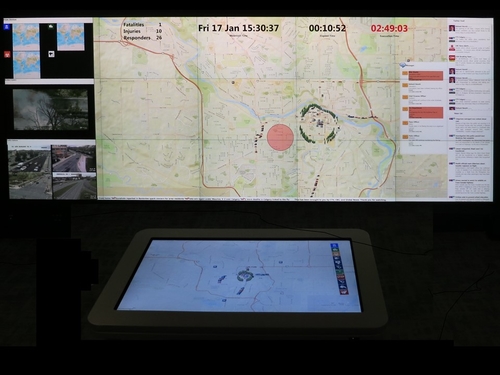

ePlan Multi-Surface: A Multi-Surface Environment for Emergency Response Planning Exercises

Apoorve Chokshi, Teddy Seyed, Francisco Marinho Rodrigues, Frank Maurer

In collaboration with a military and emergency response simulation software company, we developed ePlan Multi-Surface, a multi-surface environment for communication and collaboration for emergency response planning exercises. We describe the domain, how it informed our prototype, and insights on collaboration, interactions and information dissemination in multi-surface environments for emergency operations centres.

Exploring Narrative Gestures on Digital Surfaces

Mehrnaz Mostafapour, Mark Hancock

This work investigates narrative hand gestures used on digital surfaces while interacting with virtual objects to narrate a story. We found that narrative gestures are fundamentally different from traditional gestures used to interact with on-screen objects: narrative gestures are used to communicate visual meaning to an audience.

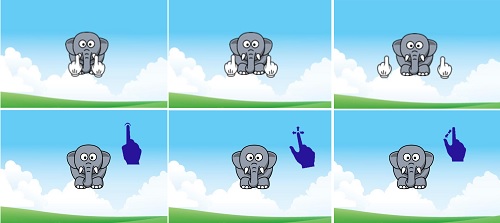

Exploring Visual Cues for Intuitive Communicability of Touch Gestures to Pre-kindergarten Children (Note)

Vicente Nacher, Alejandro Catala, Javier Jaen

In this paper, we evaluate two approaches to communicate three different touch gestures (tap, drag and scale up) to pre-kindergarten users. Our results show, firstly, that it is possible to effectively communicate them using visual cues and, secondly, that an animated semiotic approach is better than an iconic one.

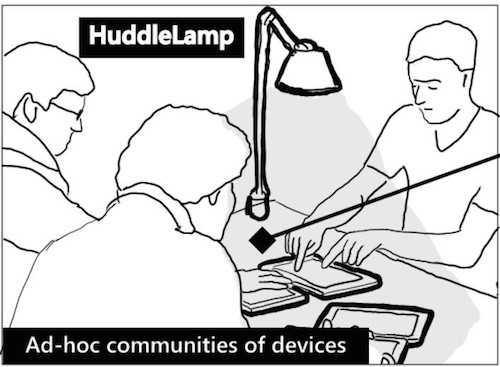

HuddleLamp: Spatially-Aware Mobile Displays for Ad-hoc Around-the-Table Collaboration

Roman Rädle, Hans-Christian Jetter, Nicolai Marquardt, Harald Reiterer, Yvonne Rogers

HyPR Device: Mobile Support for Hybrid Patient Records

Steven Houben, Mads Frost, Jakob E Bardram

The Hybrid Patient Record (HyPR) consists of a paper record, a tablet for accessing the digital record, and a mobile mediating sensing platform (HyPR Device). The HyPR Device pairs the tablet with the paper record using proximity sensing and augments the record with a notification system (color and sound) and indoor location tracking. We report on the user-centered design process and on a medical simulation in which 8 clinicians tested the HyPR.

Improving Pre-Kindergarten Touch Performance (Note)

Vicente Nacher, Alejandro Catala, Javier Jaen, Elena Navarro, Pascual Gonzalez

Pre-kindergarten children have problems with double-tap and long-press gestures. We empirically test specific strategies to deal with these issues. The study shows that implementing these design guidelines has a positive effect on success rates, making it feasible to include these gestures in future touch-based applications for pre-kindergarten children.

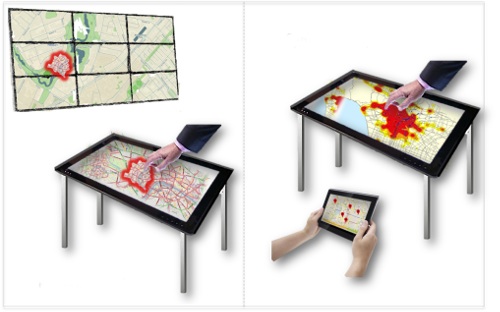

Multi Surface Interactions with Geospatial Data: A Systematic Review

Zahra Shakeri Hossein Abad, Craig Anslow, Frank Maurer

Even though Multi Surface Environments (MSEs), and how to perform interactions in them, have received much attention in recent years, research on interaction with geospatial data in MSEs is still limited. To summarize earlier research in this area, this paper presents a systematic review of MSE interactions with geospatial data.

Multi-push Display using 6-axis Motion Platform (Note)

Takashi Nagamatsu, Masahiro Nakane, Haruka Tashiro, Teruhiko Akazawa

NetBoards: Investigating a Collection of Personal Noticeboard Displays in the Workplace

Erroll W Wood, Peter Robinson

NetBoards are situated displays designed to fulfil and augment the role of non-digital personal noticeboards in the workplace. By replacing these with large, networked, touch-enabled displays, we replicate the existing physical systems' flexibility and ease of use, while enabling more expressive content creation techniques and remote connectivity.

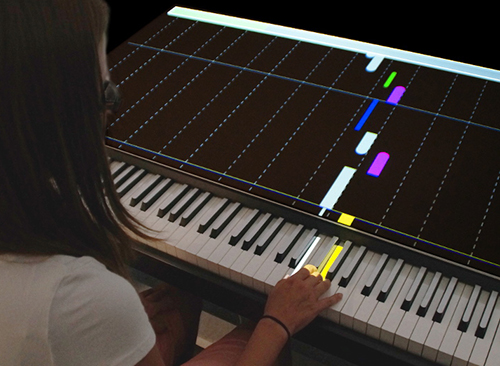

P.I.A.N.O.: Faster Piano Learning with Interactive Projection

Katja Rogers, Amrei Röhlig, Matthias Weing, Jan Gugenheimer, Bastian Könings, Melina Klepsch, Florian Schaub, Enrico Rukzio, Tina Seufert, Michael Weber

We propose P.I.A.N.O., a piano learning system with interactive projection that facilitates a fast learning process. Note information, in the form of an enhanced piano roll notation, is projected directly onto the instrument and lets learners map notes to piano keys without prior sight-reading skills. We report the results of two user studies, which show that P.I.A.N.O. supports faster learning.

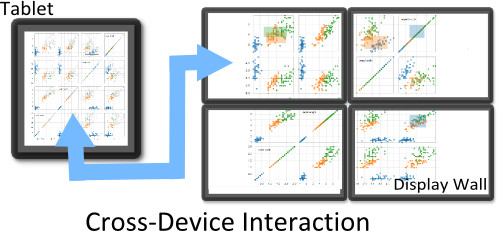

PolyChrome: A Cross-Device Framework for Collaborative Web Visualization

Sriram Karthik Badam, Niklas L. E. Elmqvist

PolyChrome is an application framework for creating collaborative web visualizations. The framework supports (1) synchronous and asynchronous collaboration through an API for operation distribution and display management; (2) co-browsing of existing webpages; and (3) maintenance of state and input events for managing consistency and conflicts. PolyChrome paves the way for multi-device ecosystems for ubiquitous analytics over the web.

SleeD: Using a Sleeve Display to Interact with Touch-sensitive Display Walls

Ulrich von Zadow, Wolfgang Büschel, Ricardo Langner, Raimund Dachselt

We present SleeD, a touch-sensitive Sleeve Display that facilitates interaction with multi-touch display walls. We discuss design implications and propose techniques for user-specific interfaces, personal views and data transfer. We verified our concepts using two prototypes, several example applications and an observational study.

Spatial Querying of Geographical Data with Pen-Input Scopes

Fabrice Matulic, David Caspar, Moira Norrie

This work presents pen-based techniques to annotate maps and selectively convert those annotations into spatial queries to search for POIs within explicitly input or implicit scopes (areas and paths). We further provide pen gestures to calculate routes between multiple locations. A user evaluation confirms the potential of our techniques.

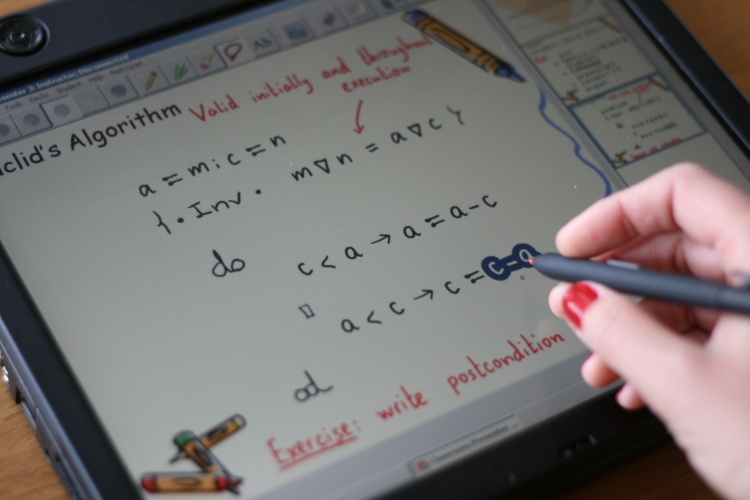

Structure Editing of Handwritten Mathematics - Improving the Computer Support for the Calculational Method

Alexandra Mendes, Roland Backhouse, Joao F. Ferreira

We present the first structure editor for handwritten mathematics oriented to the calculational mathematics involved in algorithmic problem solving. The editor provides features that allow reliable structure manipulation of mathematical content, including structured selection of expressions, the use of gestures to manipulate formulae, and definition and redefinition of operators at runtime.

Supporting Situation Awareness in Collaborative Tabletop Systems with Automation

Y.-L. Betty Chang, Stacey D. Scott, Mark Hancock

In collaborative complex domains, while automation can help manage complex tasks and rapidly update information, operators may be unable to keep up with the dynamic changes. To improve situation awareness in co-located environments on digital tabletop computers, we developed an interactive event timeline that enables exploration of historical system events, using a collaborative digital board game as a case study.

Surface Ghosts: Promoting Awareness of Transferred Objects during Pick-and-Drop Transfer in Multi-Surface Environments

Stacey D. Scott, Guillaume Besacier, Julie Tournet, Nippun Goyal, Michael Haller

Surface Ghosts virtual embodiments provide user feedback during Pick-and-Drop style cross-device transfer in a tabletop-centric multi-surface environment. Surface Ghosts take the form of semi-transparent “ghosts” of transferred objects displayed under the “owner’s” hand on the tabletop surface during transfer.

The Effects of View Techniques on Collaboration and Awareness in Tabletop Map-Based Tasks

Christophe Bortolaso, Matt Oskamp, Greg Phillips, Carl Gutwin, Nicholas Graham

We report on two studies of the effect of view techniques on collaboration and awareness. We first studied the performance of view techniques on spatial arrangement to understand how much different view configurations affect group performance in mixed-focus tasks. We then studied how people choose to configure their environment when working on a realistic mixed-focus activity.

The Usability of a Tabletop Application for Neuro-Rehabilitation from Therapists' Point of View

Mirjam Augstein, Thomas Neumayr, Irene Schacherl-Hofer

This paper describes a study conducted to evaluate selected modules of fun.tast.tisch., a tabletop system for neuro-rehabilitation. The target group usually involves patients (most of whom have an acquired brain injury) and therapists. The study described here focuses on the usability of the system from the therapists' point of view.

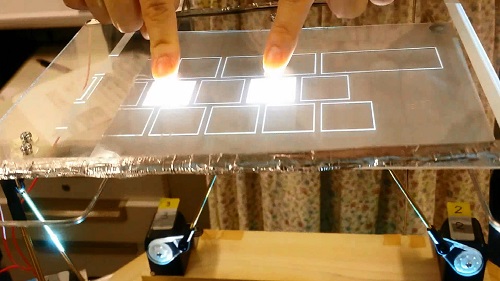

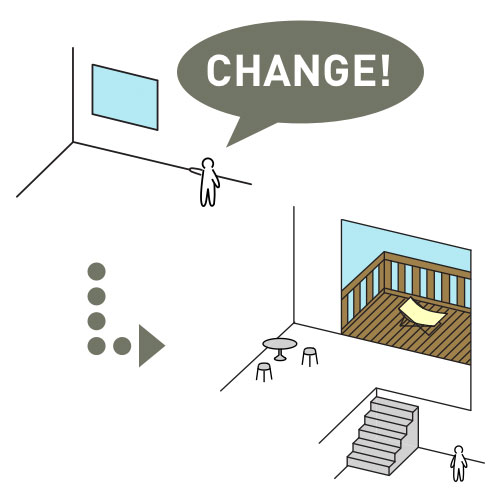

Towards Habitable Bits: Digitizing the Built Environment

Yuichiro Takeuchi

This paper identifies an emerging trend of technical research aimed at the "digitization of architectural space" and describes the range of contributions the HCI community can make in this domain. It opens up a new research field relevant not only to HCI but also to architecture and urban design.

Uminari: Freeform Interactive Loudspeakers

Yoshio Ishiguro, Ali Israr, Alex Rothera, Eric Brockmeyer

User-defined Interface Gestures: Dataset and Analysis

Daniela Grijincu, Miguel A Nacenta, Per Ola Kristensson

We present a video-based gesture dataset, a taxonomy, and a methodology for annotating video-based gesture datasets. We design and use a crowd-sourced classification task to annotate the videos. The results are made available through a web-based visualization that allows researchers and designers to explore the dataset.

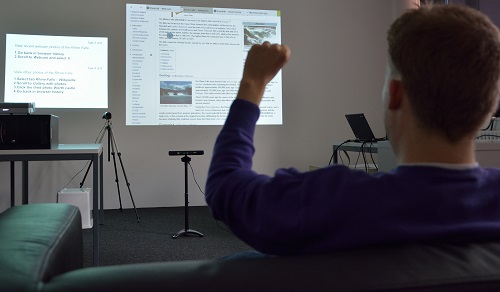

Web on the Wall Reloaded: Implementation, Replication and Refinement of User-Defined Interaction Sets

Michael Nebeling, Alexander Huber, David Ott, Moira Norrie

This paper replicates Morris's Web on the Wall guessability study, first using Wizard of Oz for multimodal interaction elicitation around Kinect. To obtain reproducible and implementable user-defined interaction sets, the paper argues for extending the methodology to include a draft of the system, enabling mixed-initiative elicitation with real system dialogue.